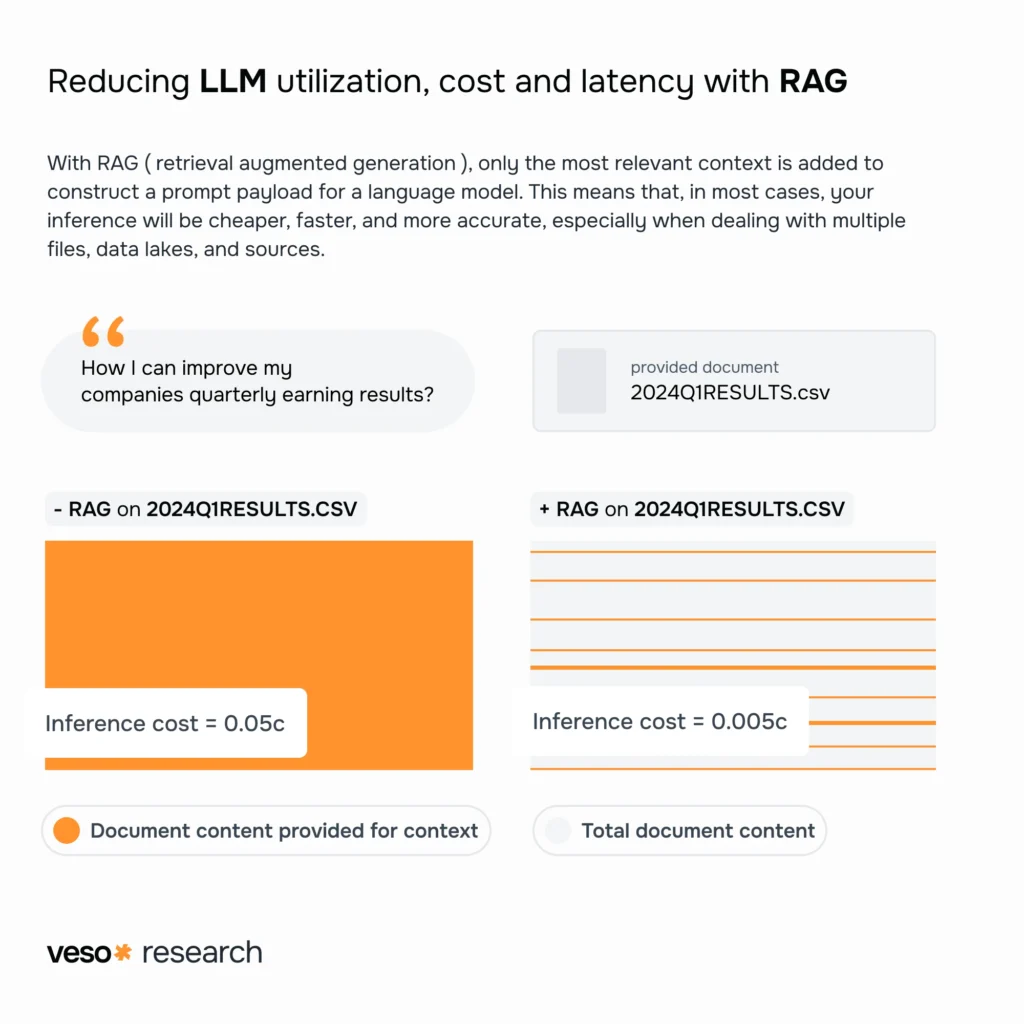

With RAG ( retrieval augmented generation ), only the most relevant context is added to construct a prompt payload for a language model. This means that, in most cases, your inference will be cheaper, faster, and more accurate, especially when dealing with multiple files, data lakes, and sources.